Recently I had to make a scientific poster for the Berkeley neuroscience retreat. I asked my lab mates what they used to create posters. Most of them, I think, used PowerPoint, which I can't use since I'm on Linux. LibreOffice Impress also seemed like a pain, and I really wanted my poster to be in PDF format.

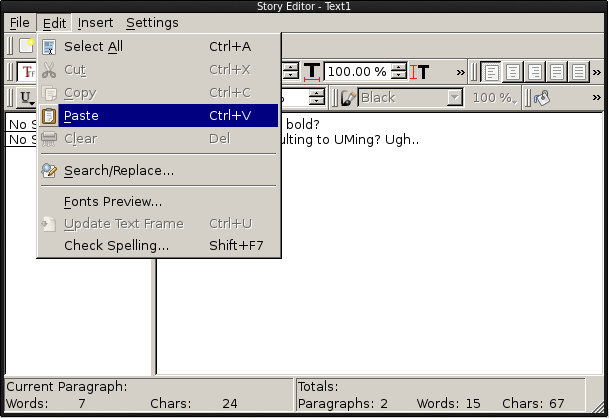

So I stumbled upon Scribus, a desktop publishing program. Scribus can create print-ready PDFs and has facilities for wrapping text around images. I used it for about a week before I finally gave up. It turns out that Scribus is a real PITA to use. Laying out text with the story editor is irritating, to say the least. For example, if you try to emphasize text *like this* in the story editor, the emphasis doesn't show up within the story editor. On top of that, you have to select all the text you want to change: if I want to switch from Arphic Uming to Courier or whatever, I have to select everything first. But because the font isn't previewed within the story editor, you don't realize that you've changed absolutely nothing by using the drop-down menu. There's also no undo history as far as I can tell, which is probably why it's recommended to edit your text in a .txt file first.

Josh Moller-Mara
